August 19, 2019

Article | by Bari Friedman | Search Engine Optimization

The State of Search: July Turbulence

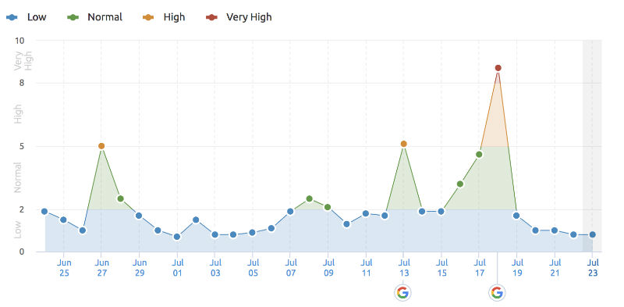

Sensors picked up large ranking shifts in the SERPs on July 13, with more significant changes on July 18, as displayed in the screenshot above. While Google did not confirm an algorithm update, we believe they did make some tweaks.

Like the recent June Core Update, the July shifts appear to reflect Google placing greater emphasis on trust factors and improving how its algorithm assesses those signals. The June update seemed to focus primarily on the trust of alternative medicine and health sites. The July ranking fluctuations appear to be more widespread, so we believe Google is now attempting to assess trust on sites beyond the alternative or natural medicine industry. However, it is still too soon to determine exactly what Google has changed.

Some members of the SEO community are referring to the search volatility in July as the “Maverick” update.

Sites that experienced drops at the end of July should focus on improving E-A-T (expertise, authoritativeness, trustworthiness) signals -- specifically those pertaining to trust -- across site pages, as recommended in our June State of Search update.

Industry News Roundup

- Action Needed: Google will no longer respect “noindex” directives in robots.txt files come September 1st. Alternative measures -- like noindex robots meta tags -- should be implemented before September.

- Google has not moved all sites to mobile-first indexing yet. Check your Google Search Console account to verify if and when your site has been moved to mobile-first indexing.

- People Also Ask questions now appear for over 80% of organic searches. Last year, this percentage was only 30%. Check out Moz’s MozCast Feature Graph to view the changes in major SERP features over time.

- In a recent Google Hangout, a representative from Google confirmed that linking out to other websites within your content is a great way to provide value to your users as long as you are linking to relevant and authoritative sites.

- Google added JavaScript SEO basics to its Search Developer’s guide. You can access the guide here.

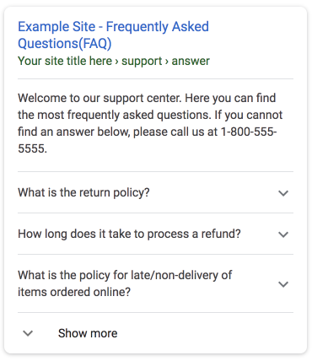
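For the robots.txt change noted in the roundup above, a quick audit can show which rules need replacing before September. The following is a minimal sketch, not an official tool: the function name `find_noindex_rules` and the sample file contents are our own illustrative assumptions. It scans robots.txt text for `noindex` lines and prints the standard robots meta tag that could be added to the affected pages instead.

```python
import re
from typing import List

# Matches lines such as "Noindex: /private/" (case-insensitive).
NOINDEX_RULE = re.compile(r"^\s*noindex\s*:\s*(\S*)", re.IGNORECASE)

# Page-level alternative: a robots meta tag placed in each page's <head>.
ROBOTS_META_TAG = '<meta name="robots" content="noindex">'


def find_noindex_rules(robots_txt: str) -> List[str]:
    """Return the path patterns listed in noindex rules (hypothetical helper)."""
    paths = []
    for line in robots_txt.splitlines():
        # Drop any trailing "# comment" before matching the rule.
        rule = line.split("#", 1)[0]
        match = NOINDEX_RULE.match(rule)
        if match:
            paths.append(match.group(1))
    return paths


# Illustrative robots.txt contents, not taken from a real site.
sample = """User-agent: *
Disallow: /cart/
Noindex: /internal-search/
noindex: /thank-you/  # legacy rule
"""

for path in find_noindex_rules(sample):
    print(f"{path}: add {ROBOTS_META_TAG} to matching pages")
```

One caveat when migrating: Google has to crawl a page to see its robots meta tag, so a page that is also blocked with `Disallow` in robots.txt will not have its noindex tag read.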

- Do you have an FAQ page on your site? Make sure you take full advantage of Google’s FAQ structured data. When implemented correctly, your FAQ questions may appear directly in the organic search results along with expandable answers: